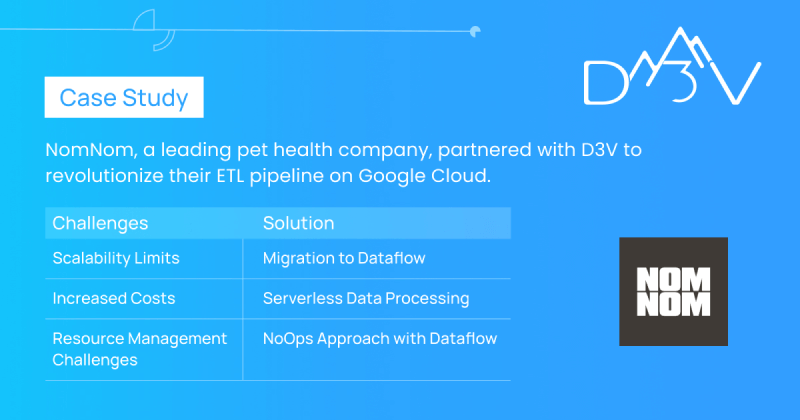

NomNom, A Pet Health & Retail Company, Partners With D3V To Automate And Scale Their Data Pipeline With Dataflow On Google Cloud

Overview

NomNom is a pet health company dedicated to improving the health and lives of dogs. They use specific algorithms set in place by scientists and Board Certified Veterinary Nutritionists to calculate tailor-made meals for pets and deliver them to the doorstep.

The company had an ETL pipeline set up in a Google Colab notebook, but it had reached its scalability limits. The notebook ran on Google Compute Engine (GCE) VMs, which come with their own limitations. NomNom therefore urgently needed to move the pipeline to a better-managed system on Google Cloud. They partnered with D3V to migrate the ETL pipeline from Google Compute Engine VMs to a serverless system, thereby optimizing resource management and reducing operational costs.

The Challenge

NomNom’s business relies on an extensive client information and analytics database. The company already had an ETL pipeline set up on Google Colab, but the VMs behind it have caps on instance size. Whenever a VM reached its maximum capacity, it had to be resized, which meant increased costs and operational overhead.

To address this problem, NomNom wanted to upgrade their pipeline to a more capable system that needs minimal maintenance. However, migrating and setting up a data pipeline can become complicated. It involves overhead such as choosing an environment that is compatible with the Colab notebook, installing Python tools, and managing dependencies. More significantly, pipeline errors can be difficult to troubleshoot. To tackle these issues effectively, NomNom partnered with D3V to leverage the fully managed, serverless offerings from Google Cloud.

Our Solution

The NomNom team held several meetings with the experts at D3V to discuss requirements, because moving away from Colab meant adapting the pipeline code to a new environment while ensuring the pipeline transformations stayed the same. D3V’s certified data engineers weighed the pros and cons of each available option for upgrading the pipeline and chose Dataflow as the best-suited solution.

The Cloud Dataflow service is well suited to running both batch and streaming ETL jobs under a unified model in a serverless, fast, and cost-effective way. Dataflow also lets users monitor pipelines through an execution graph. Moreover, it offers parallel, distributed processing with a NoOps approach. It is a serverless, managed service for running pipelines written with the Apache Beam model.

Our certified engineers at D3V migrated the data transformation logic from the Colab notebook to the Apache Beam model in order to run the pipeline on Dataflow. The team reviewed the changes needed to comply with the Apache Beam model to ensure they did not affect the nature or output of the transformations, and tested thoroughly to confirm that every transformation worked as expected. In this way, our team fulfilled the client’s most vital requirement: to take a BigQuery dataset as input, apply transformations on scalable, self-sustaining compute with Dataflow, and write the output data to BigQuery, without having to manage or worry about the compute limitations they had faced with the Colab notebook.
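
The BigQuery-to-BigQuery shape described above can be sketched with the Apache Beam Python SDK. This is a minimal illustration and not NomNom's actual pipeline: `transform_row`, the `weight_lb` and `weight_kg` fields, and the table names are hypothetical stand-ins for the notebook logic. Keeping the transformation a plain function is one way to check that its output matches the original notebook without starting a Dataflow job.

```python
from typing import Any, Dict, List, Optional

Row = Dict[str, Any]


def transform_row(row: Row) -> Row:
    """Hypothetical per-row transformation standing in for the notebook logic."""
    out = dict(row)  # copy so the input row is left untouched
    out["weight_kg"] = round(row["weight_lb"] * 0.45359237, 2)
    return out


def build_pipeline(source_table: str, sink_table: str,
                   beam_args: Optional[List[str]] = None):
    """Build a Beam pipeline that reads from BigQuery, transforms, and writes back.

    Table names use the 'project:dataset.table' form and are assumptions.
    """
    # Imported lazily so transform_row can be tested without Beam installed.
    import apache_beam as beam
    from apache_beam.options.pipeline_options import PipelineOptions

    pipeline = beam.Pipeline(options=PipelineOptions(beam_args))
    (
        pipeline
        | "ReadFromBigQuery" >> beam.io.ReadFromBigQuery(table=source_table)
        | "Transform" >> beam.Map(transform_row)
        | "WriteToBigQuery" >> beam.io.WriteToBigQuery(
            sink_table,
            # Assumes the output table already exists with a matching schema.
            create_disposition=beam.io.BigQueryDisposition.CREATE_NEVER,
            write_disposition=beam.io.BigQueryDisposition.WRITE_TRUNCATE,
        )
    )
    return pipeline
```

Calling `build_pipeline(...).run().wait_until_finish()` would execute the job on whichever runner the supplied arguments select.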

Some of the benefits of this new system include the following:

- Reduction in operational overhead due to Dataflow’s serverless stream and batch data processing.

- It’s a cost-effective system: it uses only the resources it needs while the pipeline is running, and because it is managed by Google Cloud, it doesn’t require periodic spending on new tools and upgrades.

- Since Dataflow is based on the open-source Apache Beam SDK, it is portable, unified, and extensible.

- Dataflow provides pipeline monitoring through an execution graph, which makes it easier to troubleshoot pipeline errors.
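
To illustrate the portability point, the same Beam pipeline code can usually be pointed at a local runner or at Dataflow just by changing its pipeline options. The sketch below only builds the option flags. The flag names are standard Beam options, while the helper functions and the project, region, and bucket values are hypothetical placeholders.

```python
from typing import List


def local_args() -> List[str]:
    """Flags for running a Beam pipeline locally, e.g. while testing."""
    return ["--runner=DirectRunner"]


def dataflow_args(project: str, region: str, temp_location: str) -> List[str]:
    """Flags for submitting the same pipeline to the Dataflow service."""
    return [
        "--runner=DataflowRunner",
        f"--project={project}",
        f"--region={region}",
        # Cloud Storage path Dataflow uses for temporary files.
        f"--temp_location={temp_location}",
    ]


# Placeholder values; substitute a real project, region, and bucket.
flags = dataflow_args("my-project", "us-central1", "gs://my-bucket/tmp")
print(flags)
```

Either list can be passed to Beam's `PipelineOptions` when constructing the pipeline, so the transformation code itself does not change between environments.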

Key Accomplishments

By the end of the project, our team at D3V had successfully migrated NomNom’s ETL pipeline from Google Colab to the Dataflow service on Google Cloud. We followed best practices and remained in constant communication with the client to understand all their requirements. Our team delivered quality through thorough testing and attention to detail, and met our goals within the timeline estimated for the project.

Moreover, our expert Google Cloud architects leveraged Google Cloud to create a future-proof system. This new system reduces operational costs, is capable of scaling automatically, and makes troubleshooting pipelines far easier.